LightTrends Newsletter

AI creates a new wave in demand for optical connectivity

LightCounting releases July 2023 Mega Datacenter Optics report

The race for AI supremacy has begun, and the fear of being left out is driving a lot of AI investment. Meta’s comment from its latest earnings call clearly illustrates this situation: “We just don't know how quickly these AI-powered features will scale, and we want to have the capacity in place in case they scale very quickly.”

How much funding is going into AI infrastructure is unknown, but Nvidia’s earnings call in April 2023 hinted at its scale. Nvidia expects its Q2 2023 revenue to grow 50% sequentially, driven largely by sales of GPUs and GPU-based systems for AI clusters. Financial analysts were quick to correlate GPU sales with future sales of optical transceivers and came up with very high numbers: some of their reports estimate cumulative transceiver revenues over the next 5 years at $100 billion or more, for AI applications alone.

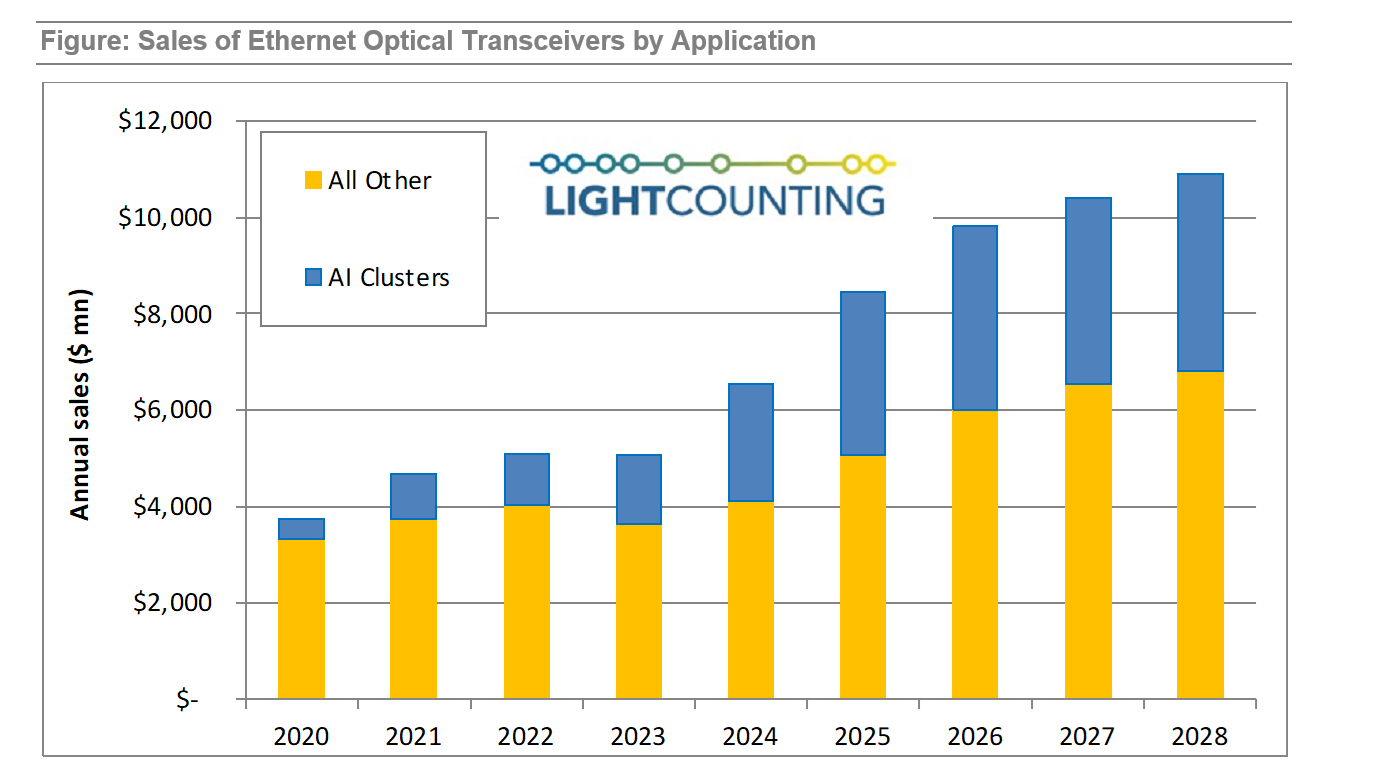

The figure above presents our forecast for sales of Ethernet optical transceivers, including an estimate of the contribution from optical connectivity for AI clusters. Total sales of optical transceivers for AI clusters will add up to $17.6 billion over the next 5 years. This is indeed a very large number, considering that all other applications of Ethernet transceivers combined will generate $28.5 billion over the same period.
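The AI share implied by these two totals is simple arithmetic. The Python sketch below reproduces it, assuming both figures are cumulative 5-year revenues in billions of US dollars and that together they make up the full Ethernet transceiver market in the forecast. The names `AI_CLUSTERS_BN`, `ALL_OTHER_BN` and `ai_share` are illustrative only, not part of any LightCounting model.

```python
# Cumulative 5-year Ethernet transceiver revenue from the forecast, in $ billions.
# Assumption: AI clusters + all other applications = total Ethernet market.
AI_CLUSTERS_BN = 17.6
ALL_OTHER_BN = 28.5


def ai_share(ai_bn: float, other_bn: float) -> float:
    """Return the AI-cluster share of total Ethernet transceiver revenue."""
    return ai_bn / (ai_bn + other_bn)


if __name__ == "__main__":
    total = AI_CLUSTERS_BN + ALL_OTHER_BN
    share = ai_share(AI_CLUSTERS_BN, ALL_OTHER_BN)
    print(f"Total: ${total:.1f}B, AI share: {share:.0%}")
```

With these inputs, AI clusters account for roughly 38% of the combined $46.1 billion.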

This is not a complete surprise. Back in 2019-2020, Google reported deploying more optics in its AI clusters than in the rest of its datacenter infrastructure. We estimate that optical transceivers deployed in AI clusters already accounted for 25% of the total market in 2022, and this does not include active optical cables (AOCs), which were the primary solution for optical interconnects in Nvidia systems.

Another factor elevating AI’s market share is reduced investment in conventional compute clusters and the optics supporting them. The timing of ChatGPT making headlines at the end of 2022 could not have been better: fears of an upcoming economic recession and the first signs of slowing revenue growth had forced all the leading Cloud companies to cut spending, including investments in datacenters and purchases of optical transceivers. Meta reduced its forecast for deployments of 200G FR4 optics in 2023 by more than 50%, and in its latest financial report it announced another 10% reduction in capex for this year. Despite this, Meta plans to grow its spending on AI clusters and the 400G FR4 optical transceivers supporting them.

Last, but not least, new designs of Nvidia’s AI systems require a lot more optics. More than a year ago, the company announced NVLink chassis switches designed with 800G optical connectivity in mind. LightCounting commented on this announcement in a research note, pointing out that NVLink-enabled GPU-to-GPU connectivity requires much more bandwidth than InfiniBand networks. Current estimates put the difference at 6x.

All previous Nvidia system designs used InfiniBand networks for optical connectivity, implemented mostly with AOCs. For example, we estimate that HDR (200G) InfiniBand systems deployed last year required more than half a million 200G AOCs. Sales of these systems are ramping up in 2023 and may require up to 1 million 200G AOCs and similar quantities of 200G copper cables.

Sales of the latest systems based on NDR (400G) InfiniBand are just starting to ramp now. These will mostly use pluggable 400G SR4 Ethernet transceivers instead of AOCs for InfiniBand connections, and 800G SR8 transceivers for the NVLink network. Once deployments of the new systems become comparable to current volumes of HDR-based systems, they will need 2 million 400G SR4 transceivers (each AOC is equivalent to two transceivers) and 6 million 800G SR8 modules, assuming that NVLink networks need 6x more bandwidth than InfiniBand. Please keep in mind that this will not happen this year, but it is likely to happen in 2024 or 2025. Not all systems will use optical InfiniBand and NVLink connectivity, which is most beneficial for very large arrays of GPUs. Many installations will be more modest in size and rely mostly on copper cabling.
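The volume estimate above follows from two stated assumptions: each 200G AOC is replaced by two 400G SR4 transceivers, and the NVLink network needs 6x the aggregate bandwidth of the InfiniBand network. The Python sketch below reconstructs that arithmetic, assuming aggregate bandwidth is simply the module count times the module line rate. The function name `new_system_volumes` and its parameters are hypothetical, introduced only for illustration.

```python
# Hypothetical helper reconstructing the NDR/NVLink volume estimate.
# Assumption: aggregate bandwidth = module count x module line rate (Gb/s).
SR4_RATE_GBPS = 400  # 400G SR4 transceiver for InfiniBand NDR links
SR8_RATE_GBPS = 800  # 800G SR8 transceiver for NVLink links
TRANSCEIVERS_PER_AOC = 2  # each AOC is equivalent to two transceivers


def new_system_volumes(hdr_aocs: int, nvlink_multiplier: int = 6) -> tuple[int, int]:
    """Return (400G SR4 count, 800G SR8 count) for a deployment
    comparable in size to one that used `hdr_aocs` 200G AOCs."""
    # InfiniBand: replace each AOC with two pluggable SR4 transceivers.
    sr4_modules = hdr_aocs * TRANSCEIVERS_PER_AOC
    infiniband_gbps = sr4_modules * SR4_RATE_GBPS
    # NVLink: assumed to need `nvlink_multiplier` times the InfiniBand bandwidth.
    nvlink_gbps = infiniband_gbps * nvlink_multiplier
    sr8_modules = nvlink_gbps // SR8_RATE_GBPS
    return sr4_modules, sr8_modules


if __name__ == "__main__":
    sr4, sr8 = new_system_volumes(1_000_000)
    print(f"400G SR4: {sr4:,}  800G SR8: {sr8:,}")
```

Starting from the up to 1 million 200G AOCs projected for HDR systems in 2023, this yields 2 million SR4 and 6 million SR8 modules, matching the figures in the text. Integer arithmetic is used so the module counts come out exact.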

Google uses TPUs instead of GPUs, but it also uses direct optical connectivity to TPUs to build very large arrays reaching tens of thousands of TPUs. This is not new for Google, and it explains why Google was already deploying so much optics in its AI clusters in 2019-2020. Google also recently increased its plans for deployments of 800G FR8 transceivers, which is reflected in our latest forecast.

The new report presents detailed models for sales of optical Ethernet and DWDM transceivers to the top 5 US-based and top 5 Chinese Cloud companies: Alphabet (Google), Amazon, Apple, Meta (Facebook) and Microsoft; and Alibaba, Baidu, Bytedance, Huawei and Tencent.

The Mega Data Center report is available for download by LightCounting clients.