Research Note

December 2023

Celebrating Market Opportunities in AI

Abstract

LightCounting comments on Marvell’s analyst day held December 5, 2023 at its Santa Clara, California, headquarters.

Marvell’s industry-analyst day was all about AI and the new business opportunities it offers the company, which is well placed to take advantage of the boom in AI investment. That position stems from a pivotal decision the company made seven years ago, soon after Matt Murphy became CEO, to refocus Marvell’s business on emerging opportunities in data management in the cloud.

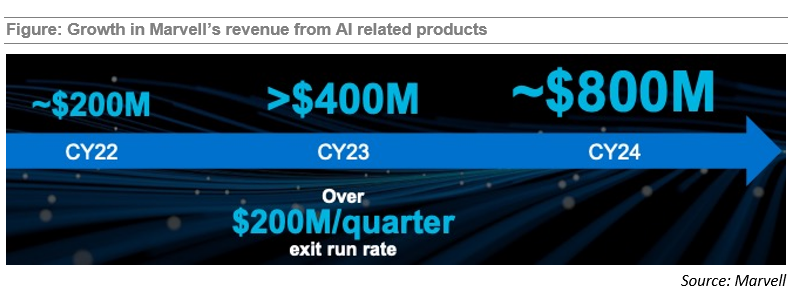

Marvell expects to double revenue from AI-related products in 2023 and to double it again in 2024, as illustrated in the figure above.

The company made several acquisitions to support this transformation. Two of them, Inphi and Innovium, made headlines across the optical communications industry in 2021. The third may be less well known, but it is just as important to the company’s AI business today: Avera, IBM’s former custom ASIC business, which Marvell acquired from GlobalFoundries in 2019.

Marvell’s sizeable custom ASIC business has developed more than 2,000 products over the last 25-plus years. Historically, this business focused on wired and wireless networking. More recently, however, Marvell has won hyperscaler designs for custom AI accelerators, which are on the cusp of ramping to production.

Nvidia’s GPUs are the best solution for ChatGPT today, but future improvements will rely on custom ASICs. Amazon, Google, Meta and Microsoft have all announced such chips recently. We estimate that the TPU chips manufactured for Google bring several billion dollars per year to Broadcom’s custom ASIC division. Marvell can do just as well, given its leadership in 3nm CMOS.

The presentation by Noam Mizrahi, Marvell’s CTO, was packed with in-depth analysis of how AI technology may develop. If you have not yet seen Roy Rubenstein’s recent interview with him, you should read it.

Noam looks beyond custom ASICs for AI, which are already here, and argues that numerous specialized AI models will emerge, each of which can be far more efficient than a single general-purpose model. New applications could be built on sets of such models if the industry finds a way to interconnect them into a new “Internet of Models”. Broadband connections will be part of this solution, but the software that manages a set of models as a single application will be the most challenging part to develop.

Noam continues to promote the idea of disaggregated AI cluster architectures, enabled in the future by co-packaged optics (CPO). In his opinion, CPO is the only way to meet future demand for high-speed interconnects.

Loi Nguyen, co-founder of Inphi and now an SVP at Marvell, unveiled the company’s latest silicon photonics engine at the event; it could be used in transceivers or in CPO. It is based on 200G lanes and integrates more than 100 elements: modulators, photodiodes, couplers, mux/demuxes, and even capacitors and resistors. It uses 3D integration of the transimpedance amplifier (TIA) and driver chips to minimize power consumption. The integrated “light engine” targets power efficiency below 10 pJ/bit with Tbps-class bandwidth (probably 3.2 Tbps). Live demos are coming soon.
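To put the light-engine targets in perspective, a quick back-of-envelope calculation helps. The sketch below assumes the 3.2 Tbps aggregate bandwidth (our estimate, not a Marvell figure) and treats 10 pJ/bit as an upper bound on energy per bit. Under those assumptions the engine would need 16 lanes of 200G and would draw at most about 32 W. The function names are illustrative only.

```python
# Back-of-envelope sizing for an optical engine (illustrative sketch).
# Assumptions: 200 Gb/s per lane, ~3.2 Tb/s aggregate bandwidth (an
# estimate, not a confirmed spec), and 10 pJ/bit as an upper-bound target.

LANE_RATE_GBPS = 200        # per-lane data rate, Gb/s
AGGREGATE_TBPS = 3.2        # assumed aggregate bandwidth, Tb/s
ENERGY_PJ_PER_BIT = 10.0    # target energy per bit, pJ/bit (upper bound)


def lane_count(aggregate_tbps: float, lane_rate_gbps: float) -> int:
    """Number of lanes needed to reach the aggregate bandwidth."""
    return round(aggregate_tbps * 1000 / lane_rate_gbps)


def power_watts(aggregate_tbps: float, energy_pj_per_bit: float) -> float:
    """Power = energy per bit x bit rate.

    Tb/s is 1e12 bits/s and pJ is 1e-12 J, so the prefixes cancel and
    (Tb/s) x (pJ/bit) gives watts directly.
    """
    return aggregate_tbps * energy_pj_per_bit


if __name__ == "__main__":
    print(lane_count(AGGREGATE_TBPS, LANE_RATE_GBPS))  # 16
    print(f"{power_watts(AGGREGATE_TBPS, ENERGY_PJ_PER_BIT):.1f} W")  # 32.0 W
```

Because the efficiency figure is a "below 10 pJ/bit" target, the computed power is a ceiling; any improvement in pJ/bit scales the power down linearly at the same bandwidth.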

Judging by Marvell’s product portfolio, its guidance for doubling AI revenue again in 2024 may be modest. We will wait and see.

Full text of the research note is available to LightCounting subscribers at: https://www.lightcounting.com/login